This is Niche Gamer Tech. In this column, we regularly cover tech and things related to the tech industry. Please leave feedback and let us know if there’s tech or a story you want us to cover!

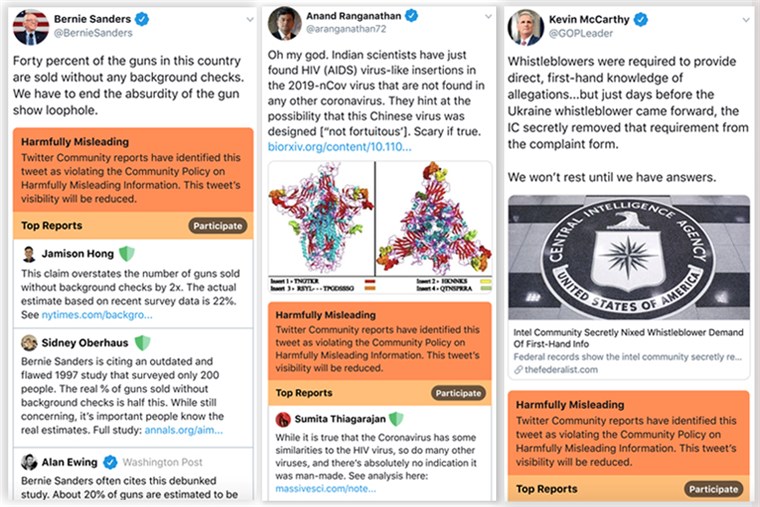

An image from an experimental version of Twitter has leaked onto social media. Twitter later confirmed that it shows a potential way to flag “harmfully misleading” tweets and warn users about them.

NBC News reports that Twitter confirmed an image (seen above) from a demonstration build of Twitter had leaked. The experiment added labels to tweets that were deemed (in NBC News’ words) “lies and misinformation posted by politicians and other public figures.”

The labels would provide “corrections” from (in NBC News’ words) “fact-checkers and journalists who are verified on the platform and possibly by other users who would participate in a new ‘community reports’ feature.” This was compared to how Wikipedia verifies its community-submitted articles.

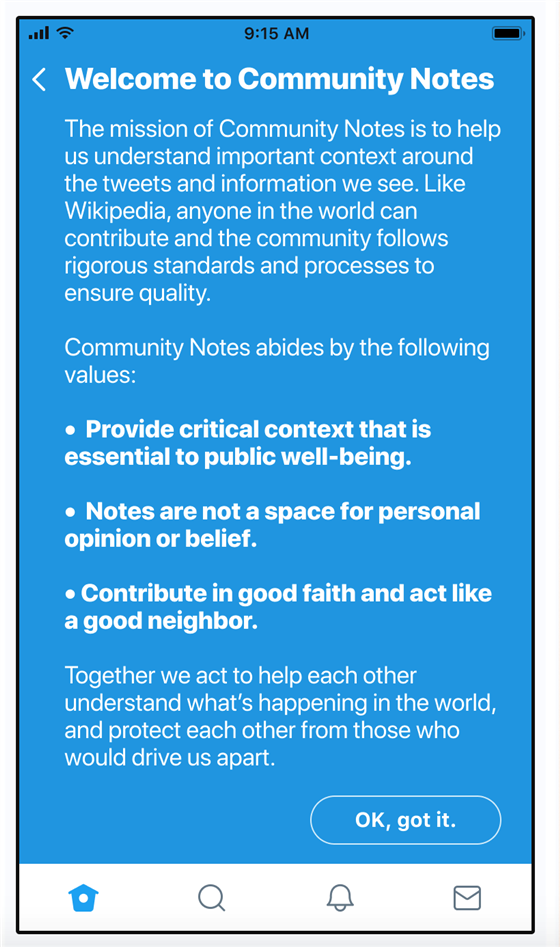

One iteration proposed that users could earn points and a “community badge” for moderating. In Twitter’s words, these would go to users who “contribute in good faith and act like a good neighbor,” and “provide critical context to help people understand information they see.”

This point-based system would allegedly (in NBC News’ words) “prevent trolls or political ideologues from becoming moderators if they differ too often from the broader community in what they mark as false or misleading.”

One demonstration (below) read “Together, we act to help each other understand what’s happening in the world, and protect each other from those who would drive us apart.”

The demo also reportedly asked members whether a tweet was likely or unlikely to be “harmfully misleading.” They were then asked how many others in the community would answer the same (on a scale of 1 to 100), and to elaborate on why the tweet is “harmfully misleading.” The demo also reportedly read “The more points you earn, the more your vote counts.”
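To make the “more points, more weight” idea concrete, here is a minimal sketch of how a points-weighted vote could be tallied. Twitter has not published how the demo counts votes, so everything here is an assumption for illustration: the `Vote` structure, the `weighted_misleading_share` function, and the simple choice of weighting each vote by the member's raw point total are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Vote:
    """One community member's response to a tweet (hypothetical structure)."""
    points: int            # reputation points the member has earned
    says_misleading: bool  # True if they marked the tweet as likely "harmfully misleading"


def weighted_misleading_share(votes: list[Vote]) -> float:
    """Return the points-weighted share of votes marking the tweet as misleading.

    Assumption: each vote counts in proportion to the voter's points.
    """
    total_points = sum(v.points for v in votes)
    if total_points == 0:
        return 0.0  # no votes (or no points) means nothing to weigh
    flagged_points = sum(v.points for v in votes if v.says_misleading)
    return flagged_points / total_points


# Four hypothetical members: one high-point member flags the tweet, three others do not.
votes = [
    Vote(points=50, says_misleading=True),
    Vote(points=10, says_misleading=False),
    Vote(points=10, says_misleading=False),
    Vote(points=30, says_misleading=False),
]
share = weighted_misleading_share(votes)
print(f"Weighted share marking the tweet misleading: {share:.0%}")
```

In this made-up example only one voter in four flags the tweet, yet the weighted share comes out at half, because that voter holds half of all the points. That is the trade-off any scheme like this would have to manage.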

A Twitter representative explained to NBC News it was “one possible iteration of a new policy to target misinformation,” though they did not have a date to roll out these features.

However, NBC News later stated that “The impending policy rollout comes as the 2020 election season is ramping up,” and “Twitter reiterated to NBC News that the community reporting feature is one of several possibilities that may be rolled out in the next several weeks.”

“We’re exploring a number of ways to address misinformation and provide more context for tweets on Twitter,” a Twitter representative told NBC News. “Misinformation is a critical issue and we will be testing many different ways to address it.”

Some conservatives feel that major online platforms are stifling their tweets and videos. With increasingly strict policies coming to Google, YouTube, Facebook, and Twitter designed to combat hateful or abusive content, some feel the terms have been used to silence and ban conservatives.

One outlet, PragerU, has even taken legal action against Google, though it has struggled to win a case.

Project Veritas have also gone undercover in large tech organizations, revealing that staff they interviewed had a poor view of conservatives, and were allegedly attempting to blacklist conservative websites and viewpoints.

One alleged anonymous Google employee even claimed that Google was “not an objective source of information. They are a highly biased political machine, that is bent on never letting someone like Donald Trump come to power again.”

This came via an alleged document wherein the following statement was made:

“In some cases it may be appropriate to take no action if the system accurately affects current reality, while in other cases it may be desirable to consider how we might help society reach a more equitable state via product intervention.”

Project Veritas also claimed that Twitter was using shadow banning to censor conservatives, based on comments made by Twitter staff in 2017 and 2018.

While staff such as Policy Manager Olinda Hassan described it as a means of “trying to get the shitty people to not show up,” and Software Engineer Steven Pierre said it was a way to “ban […] a way of talking,” it was Twitter Content Review Agent Mo Norai who was openly against President Trump.

Project Veritas claimed they learned that “in the past” Twitter employees would “manually ban or censor Pro-Trump or conservative content.” While Norai explained the banning process was “more discretion on your view point, I guess how you felt about a particular matter,” she elaborated further.

“Yeah, if they said this is: ‘Pro-Trump’ I don’t want it because it offends me, this, that. And I say I banned this whole thing, and it goes over here and they are like, ‘Oh you know what? I don’t like it too. You know what? Mo’s right, let’s go, let’s carry on, what’s next?'”

Norai also allegedly revealed that politically left-leaning content would come under less scrutiny. She further claimed this was an “unwritten rule” from senior management.

“A lot of unwritten rules, and being that we’re in San Francisco, we’re in California, very liberal, a very blue state. You had to be… I mean as a company you can’t really say it because it would make you look bad, but behind closed doors are lots of rules.”

” […] There was, I would say… Twitter was probably about 90% Anti-Trump, maybe 99% Anti-Trump.”

Direct Messaging Engineer Pranay Singh also allegedly stated that shadow banning algorithms had been engineered to target politically right-leaning content. “Yeah you look for Trump, or America, and you have like five thousand keywords to describe a redneck. Then you look and parse all the messages, all the pictures, and then you look for stuff that matches that stuff.”

In July 2018, President Trump tweeted, “Twitter ‘SHADOW BANNING’ prominent Republicans. Not good. We will look into this discriminatory and illegal practice at once! Many complaints.”

In the aftermath of the 2016 US election, some claimed that President Trump had used “Russian Bots” to interfere with the election, generating positive support for himself and spreading fake stories about Hillary Clinton.

While these claims have not been proven, some feel that the run-up to the 2020 US election will become another online battleground, whether over the belief that Russian bots are spreading fake information or that tech giants are aiding the Democrats by censoring Trump supporters.

The key questions now are who will fact-check the fact-checkers, how users can appeal if a fact-check is wrong, and whether Twitter, or even the Twitter community at large, can be trusted to be judge and jury on what the truth is.

In September 2019, DARPA (the Defense Advanced Research Projects Agency) reportedly began building technologies to detect fake images, videos, and news stories and stop them from “going viral.”

If the program is successful after four years of trials, it will expand to target all “malicious intent.” Tests include giving the program 500,000 stories, with 5,000 fakes among them.
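For a sense of scale, 5,000 fakes among 500,000 stories means only 1% of the test set is fake. The short sketch below works through what that could mean for a detector. The two performance figures in it are purely hypothetical assumptions chosen for illustration; they are not numbers from DARPA or from any report on the program.

```python
TOTAL_STORIES = 500_000  # from the reported test
FAKE_STORIES = 5_000     # from the reported test

# Hypothetical detector performance, NOT figures from DARPA or any report.
ASSUMED_DETECTION_RATE = 0.90    # share of fakes correctly flagged
ASSUMED_FALSE_ALARM_RATE = 0.01  # share of genuine stories wrongly flagged

genuine_stories = TOTAL_STORIES - FAKE_STORIES
base_rate = FAKE_STORIES / TOTAL_STORIES

# How many stories of each kind the hypothetical detector would flag.
fakes_flagged = FAKE_STORIES * ASSUMED_DETECTION_RATE
genuine_flagged = genuine_stories * ASSUMED_FALSE_ALARM_RATE

# Of everything flagged, the fraction that is actually fake.
precision = fakes_flagged / (fakes_flagged + genuine_flagged)

print(f"Share of fakes in the test set: {base_rate:.1%}")
print(f"Flagged stories that are actually fake: {precision:.1%}")
```

Under these assumed rates, fewer than half of the flagged stories would actually be fake, simply because genuine stories outnumber fakes 99 to 1. Different assumptions would give different results, but this is why the appeals question matters for any automated system.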

One of the contributing factors to the birth of this technology was a video of House Speaker Nancy Pelosi that had been slowed down so that she appeared drunk or disoriented. While some outlets have shown deep concern, others have claimed the audio distortion in the video should have been enough to indicate it was fake.

Previously we also reported on how Twitter would allow users to control who could reply to their tweets.