A team of Japanese researchers based in Osaka has used the Stable Diffusion AI to help generate images, using fMRI scans as the input. The results are surprising.

Researchers Yu Takagi and Shinji Nishimoto from the Graduate School of Frontier Biosciences at Osaka University published their findings in a short paper.

The research team presented subjects with a set of images and took fMRI (functional magnetic resonance imaging) scans of the subjects' brains while they focused on each image.

The final image is the product of multiple parts, including an fMRI image output and a semantic decoder, which already existed.

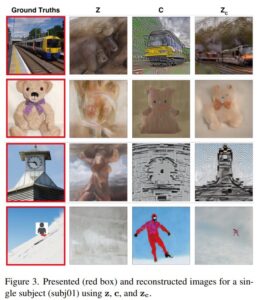

However, the addition of Stable Diffusion appears to bring the generated images more in line with the source, as we can see below.

The leftmost image is the original source, while the rightmost image is the combined result of Stable Diffusion, the semantic decoder, and fMRI image generation. Column z represents the “latent vector” derived from imaging, while column c represents the “conditioning inputs” derived from text.
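
To make the roles of z and c a little more concrete, here is a toy sketch in Python. How the two vectors are actually obtained is not spelled out above, so this assumes, purely for illustration, that each one is predicted from fMRI voxel activity by a simple ridge regression. The data is synthetic, the sizes are arbitrary, and every name (`fit_ridge`, `fmri_train`, `z_train`, `c_train`) is hypothetical rather than taken from the paper.

```python
import numpy as np


def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)


rng = np.random.default_rng(0)

# Toy sizes, chosen only to keep the example fast (assumption).
n_train, n_voxels = 200, 50
z_dim, c_dim = 16, 8

# Synthetic stand-ins for real data: fMRI responses plus the latent (z)
# and conditioning (c) vectors associated with each training image.
true_Wz = rng.normal(size=(n_voxels, z_dim))
true_Wc = rng.normal(size=(n_voxels, c_dim))
fmri_train = rng.normal(size=(n_train, n_voxels))
z_train = fmri_train @ true_Wz + 0.1 * rng.normal(size=(n_train, z_dim))
c_train = fmri_train @ true_Wc + 0.1 * rng.normal(size=(n_train, c_dim))

# Fit one linear decoder per target vector.
W_z = fit_ridge(fmri_train, z_train)
W_c = fit_ridge(fmri_train, c_train)

# Decode both vectors from a new, unseen scan.
fmri_new = rng.normal(size=(1, n_voxels))
z_pred = fmri_new @ W_z
c_pred = fmri_new @ W_c
print(z_pred.shape, c_pred.shape)
```

In a real pipeline, the predicted z and c would then be handed to the image generator. That step is left out here because it depends on the specific Stable Diffusion setup.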

The paper has not yet been peer reviewed, and it already appears to have some holes. At least one individual has pointed out that the semantic decoding is doing the heavy lifting. They further imply that the use of text as part of the prompt undermines the authenticity of the end result as a product of Stable Diffusion.

Semantic Decoding has existed since at least 2016 and you can read more about it here.

What do you think? Is Stable Diffusion on the cutting edge of visualizing thoughts? Or is it just an overstated bit of polish on existing technology?

This is Niche Gamer Tech. In this column, we regularly cover tech and things related to the tech industry.